Abbreviations

| Acronym | Definition |
|---|---|
| BE | Basic event (of a fault tree) |
| BT | Bow-tie analysis |
| EPOS-IP | European Plate Observing System - Implementation Phase (European project), https://www.epos-ip.org/ |
| ERA | Environmental risk assessment |
| ET | Event tree |
| E(x) | Mean value of x |
| FT | Fault tree |
| HazMat | Hazardous materials |
| HPP | Homogeneous Poisson process |
| IAM | Integrated assessment modelling |
| EPISODES Platform | Platform for Research into Anthropogenic Seismicity and other Anthropogenic Hazards, developed within IS-EPOS project |
| Λ | Equivalent sample size |
| MERGER | Simulator for Multi-hazard risk assessment in ExploRation/exploitation of GEoResources |
| MHR | Multi-hazard risk |
| PRM | Physical reliability model |
| SD(x) | Standard deviation of x |
| SHEER | Shale gas exploration and exploitation induced risks (European project) |
| TCS | Thematic core service (in EPOS-IP project) |
| TCS-AH | Anthropogenic hazards thematic core service, https://tcs.ah-epos.eu/ |
| TE | Top event (in a fault tree) |

Introduction

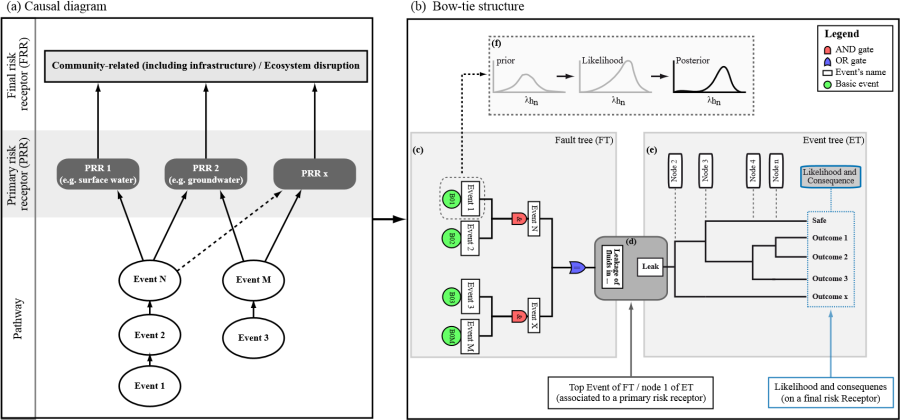

The implemented method for multi-hazard risk (MHR) assessment relies on quantifying the likelihood and the related consequences of identified risk pathway scenarios (e.g. Fig. 1a), structured using a bow-tie (BT, Fig. 1b) approach (e.g. Bedford and Cooke, 2001; Rausand and Høyland, 2004). The BT is widely used in reliability analysis and has been proposed for assessing risks in a number of geo-resource development applications, for example in offshore oil and gas development (e.g. Khakzad et al, 2013, 2014; Yang et al, 2013) and in the mineral industry in general (e.g. Iannacchione, 2008).

Figure 1: (a) generic causal diagram used for qualitative structuring of a set of scenarios; (b) bow-tie structure for a determined scenario of interest (modified from Garcia-Aristizabal et al, 2017); (c) fault tree component of the bow-tie structure; (d) critical event linking the fault tree and the event tree; (e) event tree component of the bow-tie structure; (f) Bayesian inference of the parameters of the probabilistic models used to define basic events in fault trees and nodes in event trees.

In particular, BT analysis provides an adequate structure for detailed assessments of the probability of occurrence of events, or chains of events, in a given accident scenario. It is designed to assess the causes and effects of specific critical events. It is composed of a fault tree (FT, Fig. 1c), built by identifying the possible events causing the critical or top event (TE, Fig. 1d), and an event tree (ET, Fig. 1e), built by identifying the possible consequences of the defined TE (e.g. Rausand and Høyland, 2004). Therefore, in the BT structure, the top event of the FT is the initiating event of the ET analysis.

The FT is a graphical representation of the combinations of basic events that lead to the undesirable critical situation defined as the TE (e.g. Bedford and Cooke, 2001). Starting from the TE, all possible ways for this event to occur are systematically deduced until the required level of detail is reached. Events whose causes are further developed are intermediate events, and events that terminate branches are basic events (BE). The FT implementation is based on three assumptions: (1) events are binary (they either occur or do not occur); (2) basic events are statistically independent; and (3) relationships between events are represented by logical Boolean gates (mainly AND and OR). The probability of occurrence of the TE is calculated from the occurrence probabilities of the BEs.
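Under assumptions (1) to (3), the TE probability of a tree built from AND and OR gates can be computed bottom-up: an AND gate multiplies the probabilities of its inputs, and an OR gate returns one minus the product of their complements. The minimal Python sketch below illustrates this rule. The nested-tuple tree encoding and the function name `evaluate` are hypothetical and are not MERGER's internal representation; the sketch also assumes that no basic event appears in more than one branch (repeated events would require a minimal cut set analysis).

```python
from math import prod
from typing import Dict, Tuple, Union

# A node is either a basic-event name (str) or a gate: ("AND" | "OR", [children]).
Node = Union[str, Tuple[str, list]]


def evaluate(node: Node, p_basic: Dict[str, float]) -> float:
    """Return the occurrence probability of `node`, assuming independent events."""
    if isinstance(node, str):
        return p_basic[node]  # basic event: look up its probability
    gate, children = node
    probs = [evaluate(child, p_basic) for child in children]
    if gate == "AND":
        return prod(probs)  # all inputs must occur
    if gate == "OR":
        return 1.0 - prod(1.0 - p for p in probs)  # at least one input occurs
    raise ValueError(f"unsupported gate: {gate}")


# Hypothetical tree: TE = (A OR B) AND C
tree = ("AND", [("OR", ["A", "B"]), "C"])
print(evaluate(tree, {"A": 0.1, "B": 0.2, "C": 0.5}))
```

Here the OR gate gives 1 - 0.9 × 0.8 = 0.28 and the AND gate then gives 0.28 × 0.5 = 0.14.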

The ET is an inductive analytical diagram in which an event is analysed through a logical series of subsequent events or consequences. In this context, the overall goal of the ET analysis is to determine the probability of the possible consequences resulting from the occurrence of a given initiating event. Moreover, most industrial systems include barriers and safety functions installed to stop the development of accidental events or to reduce their consequences; these elements should be considered in the consequence analysis.
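In an ET, the probability of each outcome is the probability of the initiating event multiplied by the conditional probabilities of the branches taken along its path. The Python sketch below enumerates all paths of a tree with binary nodes (e.g. a barrier that works or fails). It is a generic illustration, not the MERGER-ET solver (which is not yet released): the node names and probabilities are hypothetical, and each node's conditional probability is assumed not to depend on the path taken.

```python
from itertools import product
from typing import Dict, List, Tuple


def event_tree_outcomes(p_initiating: float,
                        nodes: List[Tuple[str, float]]) -> Dict[Tuple[bool, ...], float]:
    """Enumerate the paths of an ET with binary nodes.

    `nodes` lists (name, p_yes) pairs in the order the ET is traversed; p_yes is the
    conditional probability that the node's event occurs (assumed path-independent).
    Returns a dict mapping each path (True/False per node) to its probability.
    """
    outcomes: Dict[Tuple[bool, ...], float] = {}
    for path in product([True, False], repeat=len(nodes)):
        p = p_initiating
        for (_, p_yes), occurs in zip(nodes, path):
            p *= p_yes if occurs else 1.0 - p_yes
        outcomes[path] = p
    return outcomes


# Hypothetical example: initiating event with probability 0.01, followed by
# "barrier fails" (0.1) and "release reaches a receptor" (0.3).
paths = event_tree_outcomes(0.01, [("barrier fails", 0.1), ("reaches receptor", 0.3)])
for path, p in paths.items():
    print(path, p)
```

Because every branch pair sums to one, the outcome probabilities always add up to the probability of the initiating event.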

The quantitative assessment of the scenarios implemented in a BT structure is based on the probabilities assigned to the basic events of the FT and to the nodes of the ET. In this work, the BT logic structure is coupled with a range of probabilistic tools flexible enough to accommodate different types of phenomena in the analyses. Furthermore, the risk scenarios associated with geo-resource development activities are likely to include events closely related to geological, hydrogeological, and geomechanical processes in underground rock formations, where direct measurements are limited; alternative modelling approaches for obtaining reliable data therefore need to be considered.

This document is a step-by-step guide to the first release of the MERGER tool as implemented in the EPISODES Platform. At the current stage, the released version of MERGER includes only the fault tree solver (MERGER-FT); for this reason, this guide focuses only on that part of the system. The full MERGER system (which includes the MERGER-ET solver and therefore the tool for solving the full bow-tie structure) will be released soon. A detailed technical description of the model implemented in MERGER can be found in Garcia-Aristizabal et al (2019).

Step-by-step guide: a worked example

In this section, we briefly describe how to use the application in its current state of development. This guide will be updated as new releases and updates of the system become available. Descriptions of functionalities not yet available in the EPISODES Platform are highlighted in red.

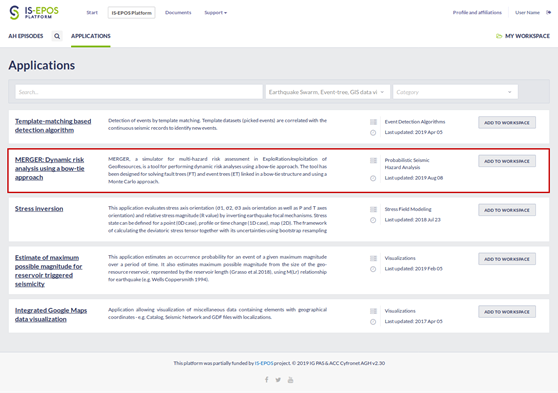

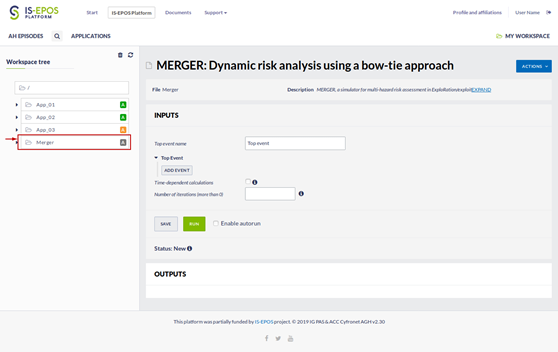

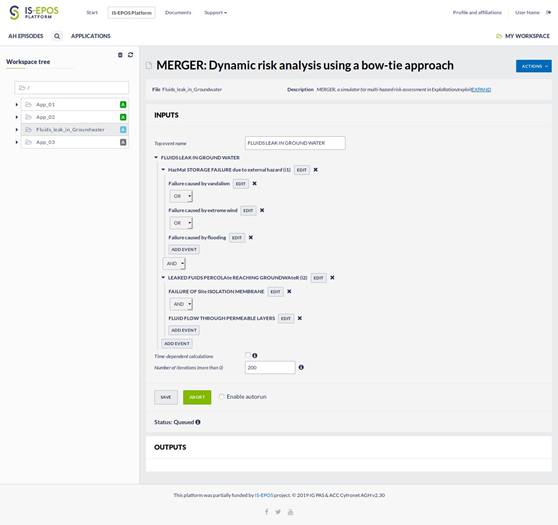

In the EPISODES Platform, MERGER is available from the Applications menu (Fig. 2). To use any application of the platform, it must first be added to the user’s workspace (using the Add to workspace button; see the quick start guide, EPISODES, 2018). Once MERGER is available in the Workspace, the user can open the application, which is then ready to use. The loaded application looks as shown in Fig. 3. The first step of an assessment is to provide the input data required for the quantitative analyses, which, in the most general case, consist of: (a) the definition of the TE of interest; (b) the input/analysis of the fault tree; and (c) the input/analysis of the event tree. In the current release, only the FT component of the MERGER BT structure has been integrated in the EPISODES Platform; for this reason, here we describe only the interface for constructing and analysing the FT component.

Note: At the bottom of the main page of MERGER-FT there are two buttons, Save and Run. Use the Save button while entering your data, to avoid losing it if you are accidentally logged out of the platform (e.g., after a long period of inactivity). Use the Run button to execute the software and evaluate the fault tree once you have finished entering and checking the input data.

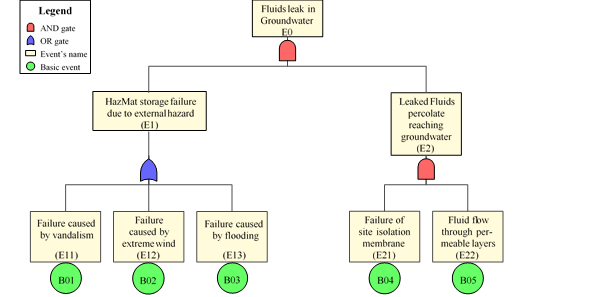

To illustrate the data input process in MERGER-FT, we work through a very simple example: a scenario of possible groundwater pollution caused by a surface spill, following the failure, under the effect of external hazards, of a storage unit containing flowback fluids (from hydraulic fracturing operations). This example has been freely adapted from an analysis in Garcia-Aristizabal et al (2019). Fig. 4 shows a simple FT for this example, which considers five BEs defined as shown in Table 1. It is assumed that an isolation (impermeable) membrane has been installed at the site to protect groundwater from on-site surface spills.

Figure 2: Example of a list of applications within the EPISODES Platform

Figure 3: View of the Fault tree input/analysis of MERGER (MERGER-FT) within EPISODES Platform after loading the tool in the workspace.

Figure 4: Fault tree for assessing the probability of HazMat fluids reaching a drinking groundwater layer associated with the failure of a storage unit containing flowback fluids

Preparing the data for input

For reference, here we briefly describe how to prepare the required input data for this simple example. More details regarding the procedure, models, and required model parameters can be found in Section 3 and in Garcia-Aristizabal et al (2019).

HazMat leakage caused by storage failure: setting B01, B02, and B03

The HazMat storage failure (intermediate) event is set by assuming that flowback fluids are stored in tanks that may fail under the effect of external hazards. For simplicity, in this example we consider three possible causes: (a) vandalism, (b) extreme wind, and (c) flooding (Fig. 4). The failure rates associated with these causes are modelled as homogeneous Poisson processes, and each cause is set as a separate basic event (B01, B02, and B03, respectively).

Given the Bayesian approach implemented in MERGER for modelling Poisson processes, it is necessary to set a prior state of knowledge (using, e.g., generic data) and a likelihood function encoding the available site-specific data. To set the prior distributions of these BEs, we use the tank failure rate data published by Gould and Glossop (2000) and Pope-Reid Associates (1986). To calculate the probability of HazMat storage failure, we consider that a number $N_c$ of storage containers hold flowback fluids at the site.

For example, according to the failure rates reported by Gould and Glossop (2000) and Pope-Reid Associates (1986), the rate of catastrophic failure caused by vandalism is $\lambda_f = 1 \times 10^{-6}$ per vessel per year. Therefore, for $N_c$ containers operating on site, each of which can fail independently, the prior mean rate of B01 can be set as $E_{B01}(\lambda) = N_c \lambda_f = 1 \times 10^{-6}$ (for $N_c = 1$).
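The arithmetic above, together with the standard HPP probability of at least one failure in a time window, $P = 1 - e^{-\lambda \Delta t}$, can be checked with a few lines of Python. The function names are illustrative only, and the conversion to a probability uses the textbook HPP relation rather than any specific MERGER routine.

```python
import math


def site_failure_rate(n_containers: int, rate_per_vessel_year: float) -> float:
    """Expected site failure rate: N_c independent vessels, each with rate lambda_f."""
    return n_containers * rate_per_vessel_year


def prob_at_least_one(rate: float, years: float) -> float:
    """HPP probability of at least one event in `years`: 1 - exp(-rate * years)."""
    return -math.expm1(-rate * years)  # expm1 keeps precision for tiny rates


lam_f = 1e-6  # catastrophic failures caused by vandalism, per vessel-year
e_b01 = site_failure_rate(1, lam_f)
print(e_b01, prob_at_least_one(e_b01, 1.0))
```

For such small rates the one-year probability is practically equal to the rate itself.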

The values defined for all BEs are reported in Table 1, where $SD_{B01}(\lambda)$ is the standard deviation assumed for the prior failure rate. To set the likelihood function, we assume that no fluid-container failures caused by vandalism have been recorded in the analysed case (i.e. $r_{B01} = 0$) during the observation period ($t_{B01} = 1$ year).

Table 1: Data used to set the prior and likelihood distributions of the BEs defined for the scenario of groundwater pollution caused by storage-unit failure (Poisson and binomial models)

| Code | Model | Prior distribution parameters | Likelihood parameters | Description of the likelihood parameters |
|---|---|---|---|---|
| B01 | Poisson | $E_{B01}(\lambda) = 1.0 \times 10^{-6}$, $SD_{B01}(\lambda) = 0.2 \times 10^{-6}$ | $r_{B01} = 0$, $t_{B01} = 1$ | Number of failures caused by vandalism in $t_{B01}$ years; years of operation |
| B02 | Poisson | $E_{B02}(\lambda) = 1.5 \times 10^{-4}$, $SD_{B02}(\lambda) = 0.3 \times 10^{-4}$ | $r_{B02} = 0$, $t_{B02} = 1$ | Number of failures caused by extreme wind in $t_{B02}$ years; years of operation |
| B03 | Poisson | $E_{B03}(\lambda) = 5 \times 10^{-3}$, $SD_{B03}(\lambda) = 2 \times 10^{-3}$ | $r_{B03} = 0$, $t_{B03} = 1$ | Number of failures caused by flooding in $t_{B03}$ years; years of operation |
| B04 | Poisson | $E_{B04}(\lambda) = 1.0 \times 10^{-4}$, $SD_{B04}(\lambda) = 0.2 \times 10^{-4}$ | $r_{B04} = 0$, $t_{B04} = 1$ | Number of membrane isolation failures in $t_{B04}$ years; years of operation |
| B05 | Binomial | $\theta_{B05} = 1.5 \times 10^{-3}$, $\Lambda_{B05} \geq 1 \times 10^{2}$ (set to 1000 in this example) | $r_{B05} = 0$, $n_{B05} = 0$ | Number of times that leaked fluids reach groundwater in the project; total number of HazMat leaks |

Failure of the isolation membrane (B04)

The basic event B04, failure of the isolation membrane, is also modelled as a homogeneous Poisson process. To our knowledge, no data on the failure rates of plastic membranes installed for site isolation in this kind of application are available in the literature; therefore, for the sake of this example, the prior distribution is set by arbitrarily assuming a generic failure rate, as shown in Table 1. To set the likelihood function, it is assumed that no membrane failures have been detected during project operations (that is, $r_{B04} = 0$ and $t_{B04} = 1$ year; see Table 1).

Leaked fluids percolate and reach groundwater (B05)

The B05 basic event is implemented using the binomial model. In this case, the prior information can be set using, for example, integrated assessment modelling (IAM) to assess the probability that fluids from a surface spill flow through the porous medium and reach the groundwater table. Defining such an IAM is beyond the scope of this guide; therefore, for the sake of this example, this value is assumed arbitrarily (see Table 1). Regarding the likelihood function, the data for this case study indicate that no leaks occurred during operations; the likelihood function is therefore set by defining zero leaks reaching groundwater ($r_{B05} = 0$) out of zero HazMat leaks caused by tank storage failures during operations ($n_{B05} = 0$; see Table 1). With no observed trials, the likelihood carries no information, so the posterior distribution coincides with the prior.

Loading the data in MERGER-FT

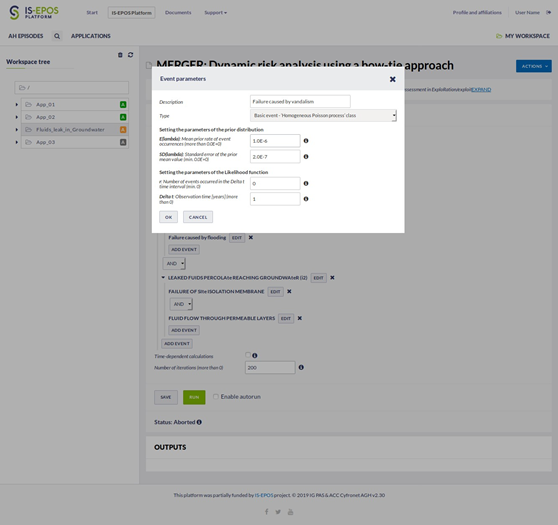

Once the input data are ready, both the FT and the data for setting the basic events can be loaded into MERGER-FT. This is done following a top-down approach: first, we define the Top Event of the FT and then, layer by layer, we create all the intermediate events down to the basic events. To add an event, click the ADD EVENT button; a box opens for entering the data. Under Type of event, you can choose between an intermediate event and a Basic Event of a given class (i.e., Homogeneous Poisson, Binomial, Weibull). Fig. 5 shows an example of setting the B01 basic event (failure caused by vandalism), modelled as a Poisson process, using the data in Table 1.

Figure 5: Example of data input: Setting a node modelled as Homogeneous Poisson process.

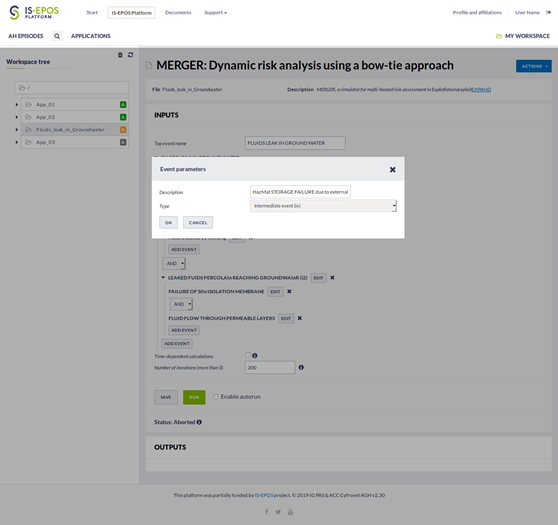

Fig. 6 shows the input box for creating an intermediate event. In this case, the system expects as input the events linked to define that specific intermediate event. In this example, as shown in the FT diagram in Fig. 4, the intermediate event results from linking three basic events (B01, B02, and B03) through an OR gate. Once the events defining a given level of the FT are created, the gate linking them (e.g., AND, OR) can be defined by selecting it from the drop-down menu located between the linked events.

Figure 6: Data input: creating an intermediate event.

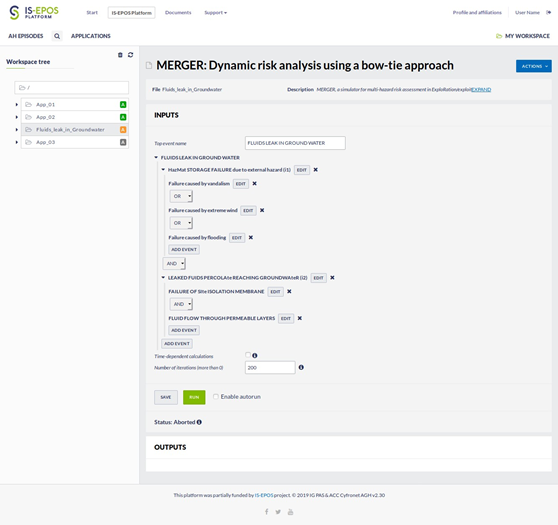

Once all the basic events defined for this example have been created, and the gates linking the basic and/or intermediate events have been defined, the FT is ready to be assessed. The resulting FT for the worked example is presented in Fig. 7.

The last parameter to set is the Number of Iterations, i.e. the number of times the full FT is evaluated by sampling the distributions characterising the BEs. In this example we set it to 200 (see Fig. 7).
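The Number of Iterations drives a Monte Carlo loop: in each iteration one value is drawn for every BE and the whole tree is evaluated once, so the collection of TE values approximates the TE's uncertainty distribution. The Python sketch below mimics that loop for the worked example using the values in Table 1. It relies on assumptions that this guide does not state and that may differ from MERGER's internals: the gamma priors are built by moment matching from $E(\lambda)$ and $SD(\lambda)$; the beta prior uses $\alpha = \theta(\Lambda + 1)$, $\beta = (1 - \theta)(\Lambda + 1)$; Poisson rates are turned into one-year probabilities with $1 - e^{-\lambda}$; and the TE is taken to be (B01 OR B02 OR B03) AND B04 AND B05, which is a hypothetical reading of Fig. 4.

```python
import math
import random
import statistics


def gamma_posterior(mean, sd, r, t):
    """Gamma prior from (mean, sd) by moment matching, updated with r events in t years."""
    shape, rate = (mean / sd) ** 2, mean / sd ** 2
    return shape + r, rate + t  # posterior (shape, rate)


def beta_posterior(theta, ess, r, n):
    """Beta prior from theta and equivalent sample size (assumed form), updated with r of n."""
    a, b = theta * (ess + 1.0), (1.0 - theta) * (ess + 1.0)
    return a + r, b + (n - r)


# Table 1 inputs. HPP events: (E(lambda), SD(lambda), r, t); binomial B05: (theta, Lambda, r, n)
HPP_EVENTS = {
    "B01": (1.0e-6, 0.2e-6, 0, 1.0),  # vandalism
    "B02": (1.5e-4, 0.3e-4, 0, 1.0),  # extreme wind
    "B03": (5.0e-3, 2.0e-3, 0, 1.0),  # flooding
    "B04": (1.0e-4, 0.2e-4, 0, 1.0),  # isolation membrane failure
}
B05 = (1.5e-3, 1000.0, 0, 0)  # leaked fluids reach groundwater


def sample_top_event(n_iter=200, horizon_years=1.0, seed=1):
    """One TE value per iteration, under the hypothetical gate layout stated above."""
    rng = random.Random(seed)
    hpp = {name: gamma_posterior(*data) for name, data in HPP_EVENTS.items()}
    a05, b05 = beta_posterior(*B05)
    samples = []
    for _ in range(n_iter):
        p = {}
        for name, (shape, rate) in hpp.items():
            lam = rng.gammavariate(shape, 1.0 / rate)  # gammavariate takes a scale = 1 / rate
            p[name] = -math.expm1(-lam * horizon_years)  # P(at least one event)
        p["B05"] = rng.betavariate(a05, b05)
        storage = 1.0 - (1.0 - p["B01"]) * (1.0 - p["B02"]) * (1.0 - p["B03"])  # OR gate
        samples.append(storage * p["B04"] * p["B05"])  # AND gate
    return samples


te = sample_top_event()
print(statistics.mean(te), statistics.quantiles(te, n=20)[-1])  # mean and ~95th percentile
```

Increasing `n_iter` makes the sample statistics more stable at the cost of longer run times, which mirrors the trade-off when choosing the Number of Iterations.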

Figure 7: Data input: The fault tree shown in Fig. 4 created in the MERGER-FT application.

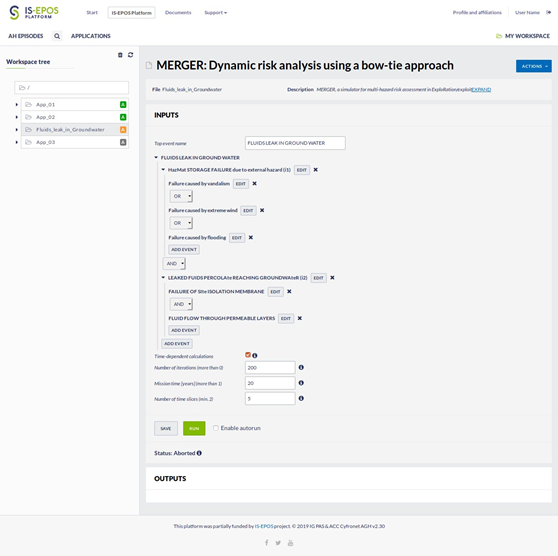

Furthermore, the MERGER-FT main screen contains a check box called Time-dependent calculations. Leave this box unchecked unless you are performing time-dependent calculations (e.g., by defining a Weibull process to describe wear or ageing). In that case, once you activate this check box, two additional parameters need to be defined (Fig. 8):

- Mission time (years): the time window over which the time-dependent calculations are computed (e.g., 20 years);

- Number of time slices: outputs of time-dependent calculations are provided in two ways: (a) data and histograms at different times, according to the number of time points set in this parameter (e.g., setting this parameter to 5 divides the interval between the current time, $t_0 = 0$, and the mission time, $t_{end}$, into 5 time intervals); (b) a plot of the evolution with time of the probability (or frequency) of each intermediate event and of the top event.
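One plausible reading of the Number of time slices parameter is that the interval between $t_0 = 0$ and the mission time is divided into equal slices and results are reported at the end of each slice. The Python sketch below computes those evaluation times and, as an illustration, the probability of at least one event by each time for a constant-rate (HPP) event. The slice convention and the function names are assumptions, since the guide does not state whether $t_0$ itself is included as an output time.

```python
import math


def slice_times(mission_time: float, n_slices: int) -> list:
    """End points of n_slices equal intervals between t0 = 0 and the mission time."""
    return [mission_time * i / n_slices for i in range(1, n_slices + 1)]


def hpp_cumulative_probability(rate: float, times: list) -> list:
    """Probability of at least one event in [0, t] for each t, for a constant rate."""
    return [-math.expm1(-rate * t) for t in times]


times = slice_times(20.0, 5)  # [4.0, 8.0, 12.0, 16.0, 20.0]
print(hpp_cumulative_probability(1e-2, times))
```

For a Weibull event with $k > 1$ the same grid would show the probability growing faster than linearly with time.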

Figure 8: Data input: extra input data required to perform time-dependent calculations.

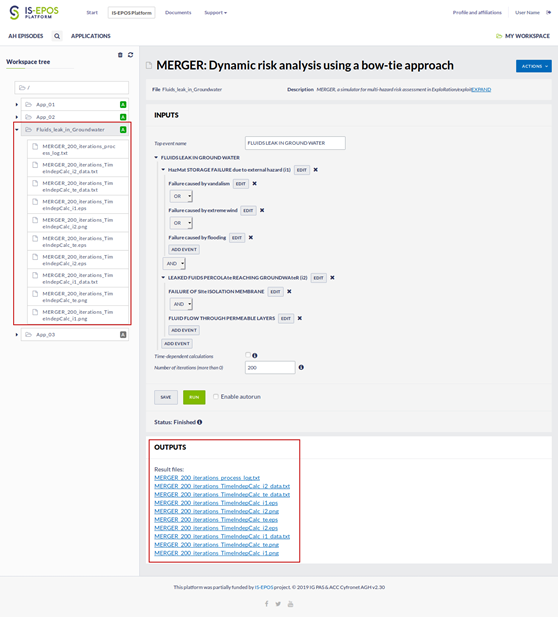

After specifying the FT inputs and running the application (Run button), the MERGER software is executed on distributed computing resources (Fig. 9). While the system is running, the text in the green button changes from Run to Abort. Pressing Abort stops the process, and no output data are provided.

Figure 9: MERGER-FT Running. Wait until the results are obtained and made available in the workspace.

The computation may take some time, depending on the complexity of the fault tree and on the number of iterations supplied as input. However, the application runs asynchronously; therefore, the results are saved even if the user is no longer logged in to the EPISODES Platform. This is an important feature when analysing large, complex problems: for computationally expensive analyses, the user can launch the calculations, log off, and retrieve the results later by logging in again.

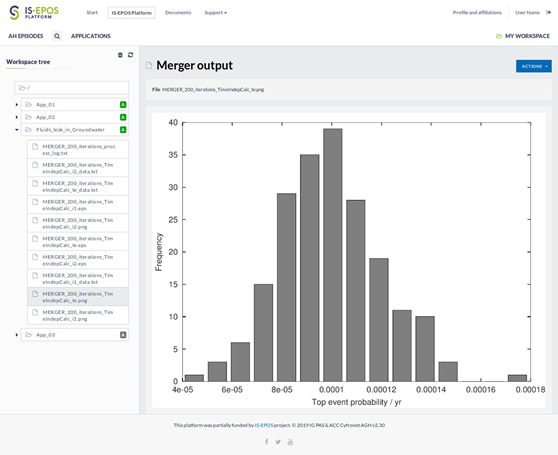

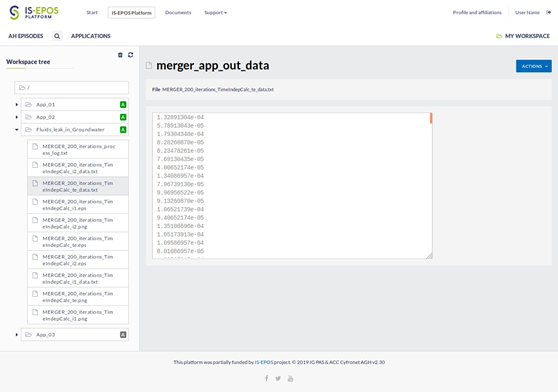

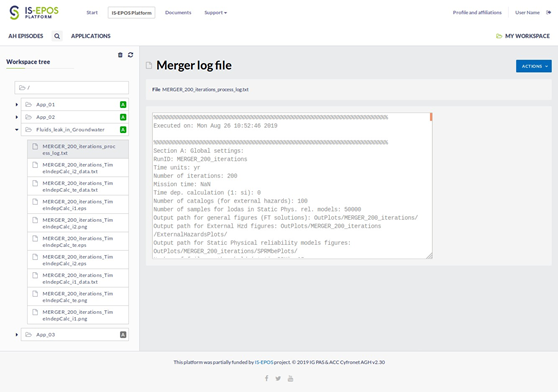

After the computation is finished, the results are saved to the user’s Workspace (see, e.g., Fig. 10) and can be displayed or downloaded. The output consists of the data (ASCII text files) and histograms (figures in raster [.png] and vector [.eps] formats) for each intermediate event (identified as ie) and for the top event (identified as te). Clicking on a figure (.png format) displays the corresponding plot in the workspace (see, e.g., Fig. 11). By downloading the data files (* data.txt), users can analyse and plot the results themselves (see, e.g., Fig. 12). A log file (MERGER log file) is also written in the workspace; it contains a summary of the input data as well as summary statistics of the probabilities (or frequencies) of the intermediate and top events (see, e.g., Fig. 13).
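Users who download the data files may want to reproduce summary statistics such as those reported in the log file. The Python sketch below assumes that each file contains one numeric sample per line, possibly preceded by a header, and takes the first number on each line; this layout and the file name `te_data.txt` are assumptions, so inspect a downloaded file before relying on the script.

```python
import statistics
from pathlib import Path


def read_samples(lines):
    """Collect the first number on each line; skip blank and non-numeric lines (assumed layout)."""
    samples = []
    for line in lines:
        fields = line.split()
        if not fields:
            continue
        try:
            samples.append(float(fields[0]))
        except ValueError:
            continue  # e.g. a header line
    return samples


def summarize(samples):
    """Mean, median and approximate 5th/95th percentiles of the samples."""
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points: 5 %, 10 %, ..., 95 %
    return {
        "mean": statistics.mean(samples),
        "median": statistics.median(samples),
        "p05": cuts[0],
        "p95": cuts[-1],
    }


if __name__ == "__main__":
    path = Path("te_data.txt")  # hypothetical name: use the file downloaded from your workspace
    if path.exists():
        print(summarize(read_samples(path.read_text().splitlines())))
```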

To download a file, select it (which displays it in the workspace), click the ACTIONS button in the top-right corner of the screen, and choose the Download option from the menu.

Figure 10: Screenshot of the MERGER-FT output data: List of files created after finishing the program execution.

Figure 11: Screenshot of the MERGER-FT output data: Plots generated in vector format (EPS) and as flat images (PNG).

Figure 12: Screenshot of the MERGER-FT output data: text files contain the output data (samples of the top event and of the intermediate events, in separate files). The number of samples equals the number of iterations set as input.

Figure 13: MERGER log file: A log file, containing a summary of input setting and results, is saved as a text file.

MERGER in a nutshell

In this section we briefly present the probabilistic model implemented in MERGER. A more detailed description of the model and the implemented system can be found in Garcia-Aristizabal et al (2019).

The MERGER-FT in a nutshell

The quantitative assessment of the scenarios implemented in a BT structure is based on the probabilistic models assigned to the basic events of the FT and to the nodes of the ET. In this section we focus on the data input for the fault tree analysis (hereinafter called MERGER-FT).

The MHR assessment approach in MERGER considers the following five classes of probabilistic models for implementing the stochastic characteristics of FT’s basic events:

- Homogeneous Poisson process;

- Binomial model;

- Weibull model;

- Static physical reliability models;

- Dynamic physical reliability models.

The current version implemented in the EPISODES Platform includes the first three probabilistic models; the last two will be made available in a future release. The Homogeneous Poisson process and the Binomial model are implemented using Bayesian data analysis techniques. In these cases, a prior state of knowledge can be set using generic information such as data from similar cases, integrated assessment modelling, and/or expert elicitation. The probabilities obtained from the initial generic data can then be updated using Bayes’ theorem (through a likelihood function) as new site-specific data become available.

In this section, we briefly indicate the main features of the implemented models, as well as the input/output parameters required for defining a given BE according to these models. A detailed description of the mathematical background is presented in Garcia-Aristizabal et al (2019).

Homogeneous Poisson process (HPP)

A constant event rate implies that events are generated by a Poisson process. In this case, the inference problem is to estimate the rate of event occurrence (λ) per unit time. For simplicity, we adopt the conjugate Poisson likelihood / gamma prior pair (e.g. Gelman et al, 1995), which is one of the models most frequently used in risk assessment applications (e.g. Siu and Kelly, 1998). A prior distribution for λ can be developed from generic data (e.g. data from similar cases or components, or from expert opinion elicitation).

The prior state of knowledge can be defined using the analyst’s best prior estimate of the rate [set as the prior mean, E(λ)] and a standard deviation, SD(λ), measuring the uncertainty in that best estimate. These two values are then used to set the parameters of the gamma prior distribution (for details see Garcia-Aristizabal et al (2019)). The (Poisson) likelihood function encodes the site-specific data, which for an HPP are the number of events r that occurred in a time interval ∆t = [0, t]. Table 2 summarises the data required for defining a basic event as a homogeneous Poisson process.

Once the posterior distribution of λ, $\pi_1(\lambda \mid E)$, has been calculated, samples of λ are drawn from it and used to calculate the probability of at least one event occurring in a given time period of interest.

Table 2: Parameters required for setting a basic event of class HPP

| Element | Parameter | Description |
|---|---|---|
| Prior distribution | E(λ), [0 ≤ E(λ) ≤ 1] | Prior assumption for the mean rate of event occurrence (λ) |
| Prior distribution | SD(λ) | Standard deviation of the prior mean value |
| Likelihood function | r, (r ≥ 0) | Number of events that occurred in a time interval ∆t |
| Likelihood function | ∆t, (∆t > 0) | Observation time (in years) |
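A minimal sketch of this procedure, using only the Python standard library, is shown below with the Table 2 parameters as inputs. It assumes the usual method-of-moments conversion of E(λ) and SD(λ) into the gamma shape $\alpha = E(\lambda)^2 / SD(\lambda)^2$ and rate $\beta = E(\lambda) / SD(\lambda)^2$; the exact conversion used by MERGER is documented in Garcia-Aristizabal et al (2019) and may differ. With r events in ∆t years, the conjugate posterior is gamma with shape $\alpha + r$ and rate $\beta + \Delta t$, and each posterior sample of λ is turned into the probability of at least one event in the period of interest. The function names are illustrative.

```python
import math
import random


def gamma_prior_from_moments(mean: float, sd: float):
    """Shape and rate of a gamma distribution with the given mean and standard deviation."""
    shape = (mean / sd) ** 2
    rate = mean / sd ** 2
    return shape, rate


def hpp_posterior(mean: float, sd: float, r: int, dt: float):
    """Conjugate update: gamma(shape, rate) prior plus r events observed in dt years."""
    shape, rate = gamma_prior_from_moments(mean, sd)
    return shape + r, rate + dt


def sample_hpp_probability(mean, sd, r, dt, period=1.0, n=1000, seed=0):
    """Draw lambda from the posterior and return P(at least one event in `period`)."""
    rng = random.Random(seed)
    shape, rate = hpp_posterior(mean, sd, r, dt)
    # random.gammavariate is parameterised by shape and *scale* (= 1 / rate)
    return [-math.expm1(-rng.gammavariate(shape, 1.0 / rate) * period) for _ in range(n)]


# B03 from Table 1 (flooding): E = 5e-3, SD = 2e-3, r = 0 in 1 year
shape, rate = hpp_posterior(5e-3, 2e-3, 0, 1.0)
print(shape, rate, shape / rate)  # the posterior mean rate is shape / rate
```

With r = 0, the update only increases the rate parameter, so the posterior mean is slightly lower than the prior mean.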

Binomial model

The standard solution for events occurring out of a number of trials uses the binomial distribution (e.g. Siu and Kelly, 1998). This model assumes that each trial has the same probability φ of producing the event, and that the probability of observing r events (e.g. failures) in n trials does not depend on the order in which successes and failures occur. The inference problem in this case is to estimate the φ parameter of the binomial distribution, which may be uncertain due, for example, to a low number of trials. If φ is uncertain, we can define a probability distribution for φ. For simplicity, in this case we also adopt the conjugate binomial likelihood / beta prior pair (another model frequently used in risk assessment applications).

r and n are site-specific observations and the input data required for setting the (binomial) likelihood function. The prior beta distribution, on the other hand, is characterised by two parameters (α and β) whose definition might not be intuitive. Therefore, to set the parameters of the prior distribution, we use indirect measures that are easier for an analyst to define: an average value as the best prior estimate of the φ parameter (θ), and a measure of the degree of uncertainty of that estimate. To define the uncertainty of the best-estimate value, we use the so-called equivalent sample size (or equivalent number of data), Λ, with Λ > 0, as defined in Marzocchi et al (2008) and Selva and Sandri (2013). Λ can be interpreted as the quantity of data the analyst would need in order to modify a prior belief about the value of φ: the larger Λ, the more confident the analyst is about the prior state of knowledge. For example, setting Λ = 1 expresses a condition of maximum uncertainty, implying that a single observation can substantially modify the prior state of knowledge.

It is worth noting that the definitions of θ and Λ need to be consistent. For example, a prior value of θ of the order of $1 \times 10^{-6}$ means that the analyst is quite confident that the event’s probability is very low (in other words, there is low epistemic uncertainty about this parameter); in such a case, a high Λ value is required to reflect this low epistemic uncertainty (setting, e.g., Λ = 1 would be inconsistent with that prior belief). It is difficult to define a rule of thumb for setting θ and Λ; as an indication, the absolute value of the order of magnitude of θ gives a rough idea of the order of magnitude of the equivalent sample size (or number of trials) required to obtain such an estimate. Therefore, for a consistent definition of Λ, extreme (high or low) θ values usually imply high confidence (i.e. the event is very likely or very unlikely) and therefore a high value of Λ.

Table 3 summarises the data required for defining a binomial basic event. Once the posterior distribution of φ, $\pi_1(\varphi \mid E)$, has been calculated (for details see Garcia-Aristizabal et al (2019)), samples of φ are drawn from it to define the probability of the binomial BE.

Table 3: Parameters required for setting a binomial basic event

| Element | Parameter | Description |
|---|---|---|
| Prior distribution | θ, [0 ≤ θ ≤ 1] | Prior assumption for the mean value of the event probability (e.g. failure) |
| Prior distribution | Λ, (Λ > 0) | Equivalent sample size required for modifying a prior belief |
| Likelihood function | r, (0 ≤ r ≤ n) | Number of events (e.g. failures) out of n trials |
| Likelihood function | n, (n ≥ 0) | Number of trials performed |
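The Python sketch below shows one way to turn θ and Λ into a beta prior and update it with r events in n trials. It assumes the parameterisation $\alpha = \theta(\Lambda + 1)$, $\beta = (1 - \theta)(\Lambda + 1)$, under which Λ = 1 and θ = 0.5 give the uniform Beta(1, 1) prior, i.e. the maximum-uncertainty case described above; the exact form used in MERGER is given in Garcia-Aristizabal et al (2019) and may differ. The hypothetical helper `prior_cv` illustrates the consistency argument: for a very small θ, a small Λ yields a prior whose spread is far larger than its mean.

```python
import math
import random


def beta_prior(theta: float, ess: float):
    """Beta(alpha, beta) with mean theta and equivalent sample size `ess` (assumed form)."""
    if not (0.0 < theta < 1.0 and ess > 0.0):
        raise ValueError("need 0 < theta < 1 and Lambda > 0")
    return theta * (ess + 1.0), (1.0 - theta) * (ess + 1.0)


def binomial_posterior(theta: float, ess: float, r: int, n: int):
    """Conjugate update of the beta prior with r events out of n trials."""
    if not 0 <= r <= n:
        raise ValueError("need 0 <= r <= n")
    a, b = beta_prior(theta, ess)
    return a + r, b + (n - r)


def prior_cv(theta: float, ess: float) -> float:
    """Coefficient of variation (SD / mean) of the prior; large values mean high uncertainty."""
    a, b = beta_prior(theta, ess)
    var = a * b / ((a + b) ** 2 * (a + b + 1.0))
    return math.sqrt(var) / theta


def sample_phi(theta, ess, r, n, size=1000, seed=0):
    """Draw phi values from the beta posterior."""
    rng = random.Random(seed)
    a, b = binomial_posterior(theta, ess, r, n)
    return [rng.betavariate(a, b) for _ in range(size)]


print(beta_prior(0.5, 1.0))  # (1.0, 1.0): uniform prior
print(prior_cv(1e-6, 1.0))   # spread hundreds of times the mean: inconsistent choice
print(prior_cv(1e-6, 1e6))   # spread comparable to the mean
```

For B05 in the worked example ($n_{B05} = 0$), `binomial_posterior` returns the prior parameters unchanged, consistent with a likelihood that carries no information.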

The Weibull model

The Weibull distribution has been identified as one of the most useful distributions for modelling and analysing lifetime data in areas such as engineering, geosciences, and biology (see, e.g. Garcia-Aristizabal et al, 2012). In this approach, the Weibull distribution is used to describe systems with a time-dependent hazard rate, in which the probability of an event occurring depends on the time elapsed since the last event.

The Weibull distribution is characterised by two parameters (λ > 0 and k > 0) and is defined for positive real numbers (e.g. Leemis, 2009). The mathematical description of this model is presented in Garcia-Aristizabal et al (2019). The shape parameter k determines the time-dependent behaviour of the hazard rate: for k < 1, the hazard rate decreases with time; for k = 1, it is constant (equivalent to a homogeneous Poisson process); and for k > 1, it increases with time (as in an ageing or wear process). Given the types of application considered here, the cases of main interest are those with k ≥ 1.

In the MHR approach presented here, the Weibull model is used to calculate the conditional probability that an event happens in a time interval $(x, x + \Delta t)$, given that $x = \tau - \tau_L$ years have passed since the previous event, where $\tau$ is the current time of the assessment and $\tau_L$ is the time of the last event.

Defining a BE with the Weibull model in this MHR approach requires setting five parameters, as described in Table 4: besides the values of the two distribution parameters (λ, k), their uncertainties are also required. Moreover, since this model is used for processes with a time-dependent hazard rate, it is also necessary to set To, the time passed since the last event (or, when modelling an element’s wear or ageing, the time that the element has been operating).

Table 4: Parameters required for setting a basic event of Weibull class

| Parameter | Description |
|---|---|
| λ, (λ > 0) | Scale parameter (best estimate, determined, e.g., by the maximum likelihood method) |
| δλ, (δλ > 0) | Error (standard deviation) of the scale parameter value |
| k, (k > 0) | Shape parameter (best estimate, determined, e.g., by the maximum likelihood method) |
| δk, (δk ≥ 0) | Error (standard deviation) of the shape parameter value |
| To, (To ≥ 0) | Time (in years) passed since the last event. When modelling an element's wear or ageing, the time (in years) that the element has been operating |
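The conditional probability described above follows from the Weibull survival function $S(t) = \exp[-(t/\lambda)^k]$: the probability of an event in $(x, x + \Delta t)$, given that none occurred up to $x$, is $1 - S(x + \Delta t)/S(x)$. The Python sketch below implements this and propagates the parameter uncertainties of Table 4 by drawing λ and k from normal distributions redrawn until positive. That sampling scheme, and the reading of λ as a scale (time) parameter, are assumptions made for illustration rather than a description of MERGER's code; the function names are hypothetical.

```python
import math
import random


def weibull_conditional_prob(x: float, dt: float, scale: float, shape: float) -> float:
    """P(event in (x, x + dt) | no event in [0, x]) for a Weibull(scale, shape) lifetime."""
    # 1 - S(x + dt) / S(x), with S(t) = exp(-(t / scale) ** shape)
    cum_hazard_increase = ((x + dt) / scale) ** shape - (x / scale) ** shape
    return -math.expm1(-cum_hazard_increase)


def positive_normal(rng: random.Random, mu: float, sigma: float) -> float:
    """Normal draw, redrawn until positive (assumed way of keeping lambda, k > 0)."""
    if sigma == 0.0:
        if mu <= 0.0:
            raise ValueError("mean must be positive when the error is zero")
        return mu
    while True:
        value = rng.gauss(mu, sigma)
        if value > 0.0:
            return value


def sample_weibull_prob(scale, d_scale, shape, d_shape, t0, dt=1.0, n=1000, seed=0):
    """Propagate the Table 4 uncertainties into the conditional probability."""
    rng = random.Random(seed)
    return [
        weibull_conditional_prob(t0, dt,
                                 positive_normal(rng, scale, d_scale),
                                 positive_normal(rng, shape, d_shape))
        for _ in range(n)
    ]


# k = 1: constant hazard, so the one-year probability does not depend on x
print(weibull_conditional_prob(0.0, 1.0, 100.0, 1.0), weibull_conditional_prob(30.0, 1.0, 100.0, 1.0))
# k = 2 (ageing): the same one-year window is more likely after 30 years of operation
print(weibull_conditional_prob(0.0, 1.0, 100.0, 2.0), weibull_conditional_prob(30.0, 1.0, 100.0, 2.0))
```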

Considering external perturbations through physical reliability models

Note: This utility is not available yet. You can skip this section for the moment.

The physical reliability models (PRM) aim to explain the probability (or the rate) of event occurrences (as e.g. hazardous events and/or system failures) as a function of operational physical parameters (e.g. Dasgupta and Pecht, 1991; Ebeling, 1997; Melchers, 1999; Hall and Strutt, 2003; Khakzad et al, 2012). PRM are often used in reliability analysis for describing degradation and failure processes of both mechanical and electronic components (Carter, 1986; Ebeling, 1997).

This type of modelling approach may be of particular interest for MHR assessments of geo-resource development activities because it allows the analysis to include (1) simple cases of expected damage to system elements caused by generic loads (e.g. external hazards such as earthquakes or extreme meteorological events), and (2) operational parameters as covariates when modelling event occurrence rates or probabilities. A number of physical reliability models have been proposed in reliability analysis; for the approach presented here, we are particularly interested in implementing two types of PRM: (1) static and (2) dynamic PRM.

Static PRMs - We implement Static PRM as a basic template for assessing damage probabilities of elements exposed to generic loads (e.g. external hazards). This typology of models has been widely used in risk assessments associated with natural hazards, mostly in the field of seismic risk analysis (e.g. EERI Committee on Seismic Risk, 1989, among many others).

For a generic conceptual description of the static PRM implemented in this work, we take as reference the random shock-loading model (e.g. Ebeling, 1997; Hall and Strutt, 2003). In this simple model, a variable stress load L is applied, at random times, to an element (e.g. a system component or an infrastructure element) that has a given capacity to withstand that load (hereinafter called strength). Stresses are, in general, physical or chemical parameters affecting the component’s operation. In probabilistic hazard assessment, such stresses are often called intensity measures (e.g. Burby, 1998). The strength, on the other hand, is defined as the highest stress that the component can bear without reaching a given damage state (which can be defined at different degrees of criticality, e.g. failure or moderate damage).

A distribution function of the intensity measure (stress) associated with reaching a given damage state is what, in risk assessment practice, is usually called a fragility function (e.g. Kennedy et al, 1980). According to this basic model, a given damage state (e.g. failure) occurs when the stress on the component exceeds its strength (e.g. Hall and Strutt, 2003). The mathematical description of the implemented model is presented in Garcia-Aristizabal et al (2019). Stress and strength can be constants or random variables with known probability distribution functions.
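As a generic illustration of the stress-strength idea (not of the future MERGER implementation, which is described in Garcia-Aristizabal et al (2019)): if the load L and the strength S are independent normal random variables, the probability of reaching the damage state is $P(L > S) = \Phi\left((\mu_L - \mu_S)/\sqrt{\sigma_L^2 + \sigma_S^2}\right)$. The Python sketch below computes this closed form and checks it against a Monte Carlo estimate of random shocks; the normal assumption, the function names, and all numbers are hypothetical.

```python
import math
import random
from statistics import NormalDist


def failure_prob_normal(mu_load, sd_load, mu_strength, sd_strength):
    """P(load > strength) for independent normal load and strength."""
    margin_sd = math.hypot(sd_load, sd_strength)  # SD of (load - strength)
    return NormalDist().cdf((mu_load - mu_strength) / margin_sd)


def failure_prob_mc(mu_load, sd_load, mu_strength, sd_strength, n=100_000, seed=0):
    """Monte Carlo check: fraction of random shocks whose load exceeds the strength."""
    rng = random.Random(seed)
    hits = sum(rng.gauss(mu_load, sd_load) > rng.gauss(mu_strength, sd_strength)
               for _ in range(n))
    return hits / n


# Hypothetical values in arbitrary stress units
print(failure_prob_normal(50.0, 10.0, 80.0, 10.0))
print(failure_prob_mc(50.0, 10.0, 80.0, 10.0))
```

Replacing the normal strength by a lognormal one would turn the closed form into the familiar lognormal fragility curve; the Monte Carlo version works unchanged for any pair of distributions.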

Dynamic PRMs - Dynamic PRMs aim to explain event occurrence (e.g. the failure of a component) as a multivariate function of operational physical parameters (e.g. Ebeling, 1997; Khakzad et al, 2012). Operational physical parameters that can be used as covariates include, among others, temperature, velocity, pressure, vibration amplitude and fluid injection rate. Dynamic PRMs are considered in this approach under the assumption that it is possible to identify either:

- A relationship between operational or external parameters of interest and the rate of occurrence of events stressing the system (i.e. at the hazard level); or,

- A relationship between operational or external parameters and the strength of components of interest (i.e. at the vulnerability level).

The mathematical description of the implemented model is presented in Garcia-Aristizabal et al (2019). In the first case (i.e. covariates linked to the stress component), we assume that the rate of occurrence of the loading process (hazard) may be modulated as a function of one or more covariates of interest. Such a model is implemented by defining a probability distribution for the rate of the loading process, whose parameters are allowed to change as a function of the selected covariates. Examples of hazard-related covariate models of interest for MHR assessments are the covariate approaches for modelling time-dependent extreme events (e.g. Garcia-Aristizabal et al, 2015) and the model for assessing induced seismicity rates as a function of the rate at which fluids are injected underground (Garcia-Aristizabal, 2018).
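The following is a minimal sketch of a hazard-level covariate model. It assumes, hypothetically, that the event rate depends log-linearly on the fluid injection rate q, λ(q) = exp(b0 + b1·q). This functional form and the coefficients are illustrative assumptions, not the model of Garcia-Aristizabal (2018). With a piecewise-constant injection schedule, events follow a non-homogeneous Poisson process, so the probability of at least one event is 1 − exp(−Σ λ(qk)·Δtk).

```python
import math


def event_rate(q, b0, b1):
    """Hypothetical log-linear link: expected events per day at injection rate q."""
    return math.exp(b0 + b1 * q)


def prob_at_least_one(schedule, b0, b1):
    """schedule: list of (duration_days, injection_rate) steps.

    Returns (probability of >= 1 event, expected number of events) for a
    Poisson process whose rate is piecewise constant over the schedule.
    """
    expected = sum(dt * event_rate(q, b0, b1) for dt, q in schedule)
    return 1.0 - math.exp(-expected), expected


if __name__ == "__main__":
    schedule = [(5.0, 10.0), (5.0, 30.0), (10.0, 0.0)]  # hypothetical injection steps
    print(prob_at_least_one(schedule, b0=-4.0, b1=0.08))
```

The returned probability can then be used as the probability of the corresponding BE for a planned operational scenario.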

In the second case (i.e. covariates linked to the strength component), the covariates are linked to the parameters of the distribution used to model the probability of reaching a given damage state (e.g. failure) of an element of interest. Models for this case have been presented, for example, by Hall and Strutt (2003) and Khakzad et al (2012).
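For the vulnerability-level case, one hypothetical option is to let a covariate x modify the scale parameter of a Weibull model for the time to reaching the damage state, η(x) = exp(c0 + c1·x). Examples of such a covariate are years in service or a corrosion index; both are illustrative. Weibull analysis appears in Hall and Strutt (2003), but the specific link function and the values below are assumptions.

```python
import math


def weibull_scale(x, c0, c1):
    """Hypothetical covariate link for the characteristic life: eta(x) = exp(c0 + c1*x)."""
    return math.exp(c0 + c1 * x)


def damage_prob(t, x, shape, c0, c1):
    """P(damage state reached by time t | covariate x) under a Weibull model."""
    eta = weibull_scale(x, c0, c1)
    return 1.0 - math.exp(-((t / eta) ** shape))


if __name__ == "__main__":
    # Negative c1: a larger covariate value shortens the characteristic life.
    for x in (0.0, 1.0, 2.0):
        print(x, damage_prob(t=10.0, x=x, shape=2.0, c0=math.log(20.0), c1=-0.3))
```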

The implementation of these models into the MHR approach can be done according to the following general procedure (a detailed example of a specific implementation can be found in Garcia-Aristizabal, 2018):

- Identification of informative variables that can be correlated with the rate of occurrence of the events of interest.

- Identification of a probability distribution to be used as a basic template for describing the process under analysis.

- Inference of the parameter values of competing deterministic models relating the parameter(s) of the selected template distribution and the covariate(s) of interest, as well as the definition of an objective procedure for model selection.

- Testing the performance of the selected model by comparing model forecasts with actual observations.

Once the model has been calibrated and tested, it can be used to calculate the probability of the BE of interest as a function of the values taken by the selected covariates.
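The calibration and selection steps can be sketched with two competing models for hypothetical event counts observed over intervals with different injection rates. The first is a constant-rate Poisson model; the second assumes the rate is proportional to the injection rate, λ = a·q. Both have closed-form maximum-likelihood estimates. The Akaike information criterion (AIC) is used here as one possible objective selection criterion. The data, models and criterion are illustrative assumptions, not the procedure of Garcia-Aristizabal (2018).

```python
import math


def poisson_loglik(counts, expected):
    """Log-likelihood of observed counts given expected counts (all expected > 0)."""
    return sum(n * math.log(m) - m - math.lgamma(n + 1) for n, m in zip(counts, expected))


def fit_constant(counts, durations):
    """Model A: constant rate; the MLE is total events / total time."""
    lam = sum(counts) / sum(durations)
    return lam, poisson_loglik(counts, [lam * t for t in durations])


def fit_proportional(counts, durations, inj_rates):
    """Model B: rate = a * q; the MLE is total events / sum(q * t)."""
    a = sum(counts) / sum(q * t for q, t in zip(inj_rates, durations))
    return a, poisson_loglik(counts, [a * q * t for q, t in zip(inj_rates, durations)])


def aic(loglik, n_params):
    return 2 * n_params - 2 * loglik


if __name__ == "__main__":
    durations = [1.0, 1.0, 1.0, 1.0, 1.0]  # hypothetical observation windows (days)
    inj_rates = [1.0, 2.0, 3.0, 4.0, 5.0]  # hypothetical injection rates
    counts = [1, 2, 3, 4, 5]               # hypothetical observed event counts
    _, ll_a = fit_constant(counts, durations)
    _, ll_b = fit_proportional(counts, durations, inj_rates)
    print("AIC constant:", aic(ll_a, 1), "AIC proportional:", aic(ll_b, 1))
```

The model with the lower AIC is preferred. The testing step would then compare its forecasts with observations that were not used in the fit.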

Table 5: Parameters required for setting the multinomial model

Element | Parameter | Description |

ET node | n | Number of possible mutually exclusive and exhaustive events in a given node of the ET |

Prior distribution | θi (0 ≤ θi ≤ 1) | Prior assumption for the mean value of the ith event probability (i = 1,...,n), with θ1 + ... + θn = 1 |

Prior distribution | Λ (Λ > 0) | Equivalent sample size required for modifying a prior belief |

Likelihood function | y = (y1,...,yn), (yi ≥ 0) | Data vector; yi is the number of successes relative to the ith event |

MERGER-ET in a nutshell

Note: This utility is not available yet. You can skip this section for the moment.

In this section, we focus on the probabilistic tools implemented for modelling nodes in the event tree part of the BT structure. ET nodes are often defined as binary situations with two possible outcomes (e.g. yes/no, works/fails); in such cases, event probabilities are defined using the binomial model described in the previous section. However, when constructing an ET for assessing consequences, it is often necessary to set nodes with more than two mutually exclusive events. For example, if the starting event of an ET is the leakage of a hazardous material (HazMat) into surface water, the subsequent node of the ET can be set to assess the probability that the leaked volume is large, medium, or small (e.g. according to some pre-defined thresholds).

To set event probabilities in such cases, we implement the multinomial model, which is a generalisation of the binomial case. It can be used when there are n possible mutually exclusive and exhaustive events at an ET node, each event with probability φi (where, for a given node, φ1 + φ2 + ... + φn = 1).

We perform Bayesian inference of the φi parameters, adopting for simplicity the conjugate pair formed by a multinomial likelihood and a Dirichlet prior (e.g. Gelman et al, 1995). The mathematical definition of the multinomial model can be found in Garcia-Aristizabal et al (2019).

The parameters required for setting the multinomial model are summarised in Table 5. To set the parameters of the prior distribution, we follow an approach similar to the one used for the binomial model: for the analyst, it is usually easier to set an average value as the best prior estimate of each parameter (i.e. the probability φi of the ith event) and to define a degree of confidence in that estimate. Therefore, the parameters of the Dirichlet prior distribution are set with an approach analogous to the one presented for the beta distribution (Marzocchi et al, 2008). In that approach, the prior state of knowledge is set by defining (1) a vector of n best-estimate values, θi, and (2) the uncertainty associated with these prior estimates through the equivalent sample size, Λ. Λ is a number representing the quantity of data that the analyst would need to observe in order to modify the prior values.
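The following is a minimal sketch of the prior setting and conjugate update for a three-branch node (e.g. large/medium/small leaked volume). It assumes, hypothetically, the simple mapping αi = Λ·θi from the best estimates θi and the equivalent sample size Λ to the Dirichlet parameters, which keeps the prior mean equal to θi. The exact mapping used in MERGER is given in Garcia-Aristizabal et al (2019) and may differ. The update αi + yi is the standard multinomial/Dirichlet conjugacy result.

```python
import numpy as np


def dirichlet_prior(theta, equiv_sample_size):
    """Hypothetical mapping alpha_i = Lambda * theta_i (prior mean = theta_i)."""
    theta = np.asarray(theta, dtype=float)
    if not np.isclose(theta.sum(), 1.0):
        raise ValueError("best estimates theta_i must sum to 1")
    return equiv_sample_size * theta


def dirichlet_posterior(alpha, counts):
    """Conjugate update: posterior Dirichlet parameters are alpha_i + y_i."""
    return np.asarray(alpha, dtype=float) + np.asarray(counts, dtype=float)


if __name__ == "__main__":
    alpha = dirichlet_prior([0.5, 0.3, 0.2], equiv_sample_size=10.0)
    post = dirichlet_posterior(alpha, [2, 5, 3])  # hypothetical observed counts y_i
    print(post / post.sum())                      # posterior mean of phi_i
    samples = np.random.default_rng(3).dirichlet(post, size=10_000)
    print(np.percentile(samples, [5, 50, 95], axis=0))
```

With a larger Λ, the same counts move the posterior mean less. In this sense, Λ expresses the analyst's confidence in the prior estimates.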

FT and ET evaluation using Monte Carlo simulations

A BT structure is quantitatively assessed using the probability data from the BEs of the FT and the nodes of the ET. Large and complex BTs require analytical or simulation-based methods for their evaluation (e.g. Ferdous et al, 2007; Rao et al, 2009; Yevkin, 2010; Taheriyoun and Moradinejad, 2014).

We use Monte Carlo simulations to evaluate the FT and ET components of the risk pathway scenarios structured with a BT approach. The procedure is as follows: first, the FT is solved with Monte Carlo simulations by sampling the probability distributions defined for each BE. In this way, we obtain an empirical distribution for the probability of the critical top event (TE) of the FT. Empirical distributions of intermediate events of interest are also provided as an intermediate output.
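The FT step can be sketched as follows for a hypothetical tree in which TE = BE1 OR (BE2 AND BE3). The sketch assumes independent basic events with beta-distributed BE probabilities (illustrative parameters). It uses the standard gate formulas for independent inputs: AND is the product of probabilities, and OR is one minus the product of the complements. It is not the MERGER algorithm, which is described in Garcia-Aristizabal et al (2019).

```python
import numpy as np


def and_gate(*probs):
    """P(all inputs occur), assuming independent input events."""
    return np.prod(np.vstack(probs), axis=0)


def or_gate(*probs):
    """P(at least one input occurs), assuming independent input events."""
    return 1.0 - np.prod(1.0 - np.vstack(probs), axis=0)


def solve_fault_tree(n=100_000, seed=42):
    rng = np.random.default_rng(seed)
    # Hypothetical Beta(a, b) distributions for the BE probabilities.
    be1 = rng.beta(2, 200, n)
    be2 = rng.beta(5, 50, n)
    be3 = rng.beta(3, 30, n)
    ie1 = and_gate(be2, be3)  # intermediate event IE1 = BE2 AND BE3
    te = or_gate(be1, ie1)    # top event TE = BE1 OR IE1
    return {"BE1": be1, "IE1": ie1, "TE": te}


if __name__ == "__main__":
    samples = solve_fault_tree()
    for name, values in samples.items():
        print(name, values.mean(), np.percentile(values, [5, 50, 95]))
```

Each array holds one probability per Monte Carlo iteration, so its percentiles describe the empirical distribution of that event's probability.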

Second, the empirical distribution obtained for the TE’s probability is assigned to the initial node of the related ET, and the outcome of the ET is also assessed using Monte Carlo simulations. The algorithms implemented for solving the FT and ET components of the BT structure are described in Garcia-Aristizabal et al (2019).
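The ET step can be sketched in the same way. The tree, its branch distributions and the stand-in TE distribution below are all hypothetical. In practice, the TE samples would come from the FT step. The binary node ("does the leak reach surface water?") uses beta-distributed probabilities, and the three-branch node (large/medium/small leaked volume) uses Dirichlet-distributed probabilities. The probability sample for each outcome is the product of the probabilities along its path.

```python
import numpy as np


def solve_event_tree(n=100_000, seed=7):
    rng = np.random.default_rng(seed)
    # Stand-in for the empirical TE distribution produced by the FT step.
    p_te = rng.beta(2, 100, n)
    # Hypothetical binary node: does the leak reach surface water?
    p_reach = rng.beta(3, 7, n)
    # Hypothetical multinomial node: leaked volume large / medium / small.
    phi = rng.dirichlet([1.0, 3.0, 6.0], n)  # shape (n, 3); each row sums to 1
    branch = p_te * p_reach                  # P(TE and leak reaches surface water)
    outcomes = {
        "large": branch * phi[:, 0],
        "medium": branch * phi[:, 1],
        "small": branch * phi[:, 2],
        "no_reach": p_te * (1.0 - p_reach),
    }
    return p_te, outcomes


if __name__ == "__main__":
    _, outcomes = solve_event_tree()
    for name, values in outcomes.items():
        print(name, np.percentile(values, [5, 50, 95]))
```

Because the branches at each node are exhaustive, the outcome probabilities add up to the TE probability in every iteration. This provides a simple consistency check.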

Integrated assessment modelling

Many of the events of interest for MHR assessments in geo-resource development projects are rare events that, by definition, have very low occurrence probabilities. Unlike purely industrial applications, many risk pathways associated with geo-resource development activities involve elements intrinsically related to features of underground geological formations (e.g. rock fracture connectivity, fluid flow through porous media, pore pressure perturbations, induced seismicity), for which direct measurements may be very limited or even unavailable. For this reason, we explore alternative sources of information for retrieving useful data to set a prior state of knowledge for a given BE, intermediate event or node in the BT structure.

Integrated assessment modelling (IAM) is a tool used for tackling complex problems in which obtaining information from direct observations or measurements is challenging. IAM has been widely used, for example, to support the implementation of climate policies that require the best possible understanding of the potential impacts of climate change under different anthropogenic emission scenarios (see, e.g. Stanton et al, 2009). In the field of geo-resource development, IAM has been used, for example, to quantify the engineering risk in shale gas development (Soeder et al, 2014).

IAM usually tries to link the main features of a system under analysis in a single modelling framework, taking into account the uncertainties in the modelling process. An IAM application to MHR assessment can be implemented to understand how a given geological or environmental system, relevant to a BE (FT) or node (ET), behaves under specified conditions. Furthermore, it may rely on a combination of multiple data sources, such as numerical modelling or field measurements.

However, physical/stochastic modelling can be time-consuming and computationally expensive, which limits the implementation of physically based IAM in many practical applications. Expert judgement elicitation is an alternative (or complementary) tool that is often used for evaluating rare or poorly understood phenomena.

Elicitation is the process of formally capturing judgements or opinions from a panel of recognised experts on a well-defined problem, relying on their combined training and expertise (Meyer and Booker, 1991; Cooke, 1991). Structured elicitation of expert judgement has been widely used to support probabilistic hazard and risk assessment in different contexts, for example in seismic hazard assessment (e.g. Budnitz et al, 1998) and in volcanic hazard and risk assessment (e.g. Aspinall, 2006).

The outcome of IAM can therefore be used to set the probability of a given BE (or ET node) for which no direct data are available. An example of IAM for MHR assessment applied to geo-resource development was developed within the European project SHEER (Shale gas exploration and exploitation induced Risks). In that project, IAM was used to assess a risk pathway scenario in which a BE of interest is the connectivity of rock fracture networks between two zones of interest (for details, see Garcia-Aristizabal, 2017).

Need help? Having trouble? Feedback?

Contact us:

Alexander Garcia-Aristizabal.

Istituto Nazionale di Geofisica e Vulcanologia, Sezione di Bologna.

Email: alexander.garcia@ingv.it

Citation

Please acknowledge use of this application in your work:

IS-EPOS. (2019). Merger [Web application]. Retrieved from https://tcs.ah-epos.eu/

- Garcia-Aristizabal, A., J. Kocot, R. Russo, and P. Gasparini (2019). A probabilistic tool for multi-hazard risk analysis using a bow-tie approach: application to environmental risk assessments for geo-resource development projects. Acta Geophys. 67, 385-410. DOI: 10.1007/s11600-018-0201-7 https://doi.org/10.1007/s11600-018-0201-7

References

Aspinall WP (2006) Structured elicitation of expert judgement for probabilistic hazard and risk assessment in volcanic eruptions. Statistics in Volcanology, DOI 10.1144/IAVCEI001

Bedford T, Cooke R (2001) Probabilistic risk analysis: foundations and methods. Cambridge University Press, Cambridge

Budnitz RJ, Apostolakis G, Boore DM, Lloyd C, Coppersmith KJ, Cornell A, Morris PA (1998) Use of Technical Expert Panels: Applications to Probabilistic Seismic Hazard Analysis. Risk Analysis 18(4):463–469, DOI 10.1111/j.1539-6924.1998.tb00361.x, URL https://onlinelibrary.wiley.com/doi/abs/10.1111/j.1539-6924.1998.tb00361.x

Burby RJ (ed) (1998) Cooperating with Nature: Confronting Natural Hazards with Land-Use Planning for Sustainable Communities. The National Academies Press, Washington, DC

Carter A (1986) Mechanical reliability, 2nd edn. Macmillan, Great Britain

Cooke R (1991) Experts in Uncertainty. Opinion and Subjective Probability in Science. Environmental Ethics and Science Policy Series, Oxford University Press

Dasgupta A, Pecht M (1991) Material failure mechanisms and damage models. IEEE Transactions on Reliability 40(5):531–536

Ebeling C (1997) An Introduction to Reliability and Maintainability Engineering. McGraw-Hill, Boston

EERI Committee on Seismic Risk (1989) The Basics of Seismic Risk Analysis. Earthquake Spectra 5(4):675–702, DOI 10.1193/1.1585549, URL https://doi.org/10.1193/1.1585549

Ferdous R, Khan F, Veitch B, Amyotte P (2007) Methodology for Computer-Aided Fault Tree Analysis. Process Safety and Environmental Protection 85(1):70–80, DOI 10.1205/psep06002, URL http://www.sciencedirect.com/science/article/pii/S0957582007713885

Garcia-Aristizabal A (2017) Multi-risk assessment in a test case. Tech. rep., Deliverable 7.3, H2020 SHEER (Shale gas exploration and exploitation induced Risks) project, Grant n. 640896.

Garcia-Aristizabal A (2018) Modelling fluid-induced seismicity rates associated with fluid injections: examples related to fracture stimulations in geothermal areas. Geophysical Journal International 215(1):471–493, DOI 10.1093/gji/ggy284, URL http://dx.doi.org/10.1093/gji/ggy284

Garcia-Aristizabal A, Marzocchi W, Fujita E (2012) A Brownian model for recurrent volcanic eruptions: an application to Miyakejima volcano (Japan). Bulletin of Volcanology 74(2):545–558, DOI 10.1007/s00445-011-0542-4, URL https://doi.org/10.1007/s00445-011-0542-4

Garcia-Aristizabal A, Bucchignani E, Palazzi E, D'Onofrio D, Gasparini P, Marzocchi W (2015) Analysis of non-stationary climate-related extreme events considering climate change scenarios: an application for multi-hazard assessment in the Dar es Salaam region, Tanzania. Natural Hazards 75(1):289–320, DOI 10.1007/s11069-014-1324-z, URL https://doi.org/10.1007/s11069-014-1324-z

Garcia-Aristizabal A, Capuano P, Russo R, Gasparini P (2017) Multi-hazard risk pathway scenarios associated with unconventional gas development: Identification and challenges for their assessment. Energy Procedia 125:116–125, DOI 10.1016/j.egypro.2017.08.087, European Geosciences Union General Assembly 2017, EGU Division Energy, Resources & Environment (ERE)

Garcia-Aristizabal A, Kocot J, Russo R, Gasparini P (2019) A probabilistic tool for multi-hazard risk analysis using a bow-tie approach: application to environmental risk assessments for geo-resource development projects. Acta Geophysica 67(1):385–410, DOI 10.1007/s11600-018-0201-7

Gelman A, Carlin J, Stern H, Rubin D (1995) Bayesian data analysis. Chapman & Hall

Gould J, Glossop M (2000) New Failure Rates for Land Use Planning QRA: Update. Health and Safety Laboratory, report RAS/00/10

Hall P, Strutt J (2003) Probabilistic physics-of-failure models for component reliabilities using Monte Carlo simulation and Weibull analysis: a parametric study. Reliability Engineering & System Safety 80(3):233–242, DOI 10.1016/S0951-8320(03)00032-2, URL http://www.sciencedirect.com/science/article/pii/S0951832003000322

Iannacchione AT (2008) The Application of major hazard risk assessment MHRA to eliminate multiple fatality occurrences in the U.S. minerals industry. Tech. rep., National Institute for Occupational Safety and Health, Spokane Research Laboratory, URL https://lccn.loc.gov/2009285807

IS-EPOS (2018) EPISODES Platform User Guide. Tech. rep., (last accessed: May 2018), URL https://tcs.ah-epos.eu/eprints/1737

Kennedy R, Cornell C, Campbell R, Kaplan S, Perla H (1980) Probabilistic seismic safety study of an existing nuclear power plant. Nuclear Engineering and Design 59(2):315–338, DOI 10.1016/0029-5493(80)90203-4, URL http://www.sciencedirect.com/science/article/pii/0029549380902034

Khakzad N, Khan F, Amyotte P (2012) Dynamic risk analysis using bow-tie approach. Reliability Engineering & System Safety 104:36–44, DOI 10.1016/j.ress.2012.04.003, URL http://www.sciencedirect.com/science/article/pii/S0951832012000695

Khakzad N, Khan F, Amyotte P (2013) Quantitative risk analysis of offshore drilling operations: A Bayesian approach. Safety Science 57:108–117

Khakzad N, Khakzad S, Khan F (2014) Probabilistic risk assessment of major accidents: application to offshore blowouts in the Gulf of Mexico. Natural Hazards 74(3):1759–1771

Leemis L (2009) Reliability: Probabilistic Models and Statistical Methods, 2nd edn. Library of Congress Cataloging-in-Publication data, Lavergne, TN USA

Marzocchi W, Sandri L, Selva J (2008) BET EF: a probabilistic tool for long- and short-term eruption forecasting. Bulletin of Volcanology 70(5):623–632, DOI 10.1007/s00445-007-0157-y, URL https://doi.org/10.1007/s00445-007-0157-y

Melchers RE (1999) Structural Reliability Analysis and Prediction, 2nd edn. Wiley-Interscience, Great Britain

Meyer M, Booker J (1991) Eliciting and Analyzing Expert Judgement: A Practical Guide. Academic Press Limited, San Diego, CA.

Pope-Reid Associates Inc (1986) Hazardous waste tanks risk analysis. Tech. rep., prepared for the Office of Solid Waste, U. S. Environmental Protection Agency

Rao KD, Gopika V, Rao VS, Kushwaha H, Verma A, Srividya A (2009) Dynamic fault tree analysis using Monte Carlo simulation in probabilistic safety assessment. Reliability Engineering & System Safety 94(4):872–883, DOI 10.1016/j.ress.2008.09.007, URL http://www.sciencedirect.com/science/article/pii/S0951832008002354

Rausand M, Høyland A (2004) System Reliability Theory: Models, Statistical Methods and Applications. Wiley-Interscience, Hoboken, NJ

Selva J, Sandri L (2013) Probabilistic Seismic Hazard Assessment: Combining Cornell-Like Approaches and Data at Sites through Bayesian Inference. Bulletin of the Seismological Society of America 103(3):1709, DOI 10.1785/0120120091, URL http://dx.doi.org/10.1785/0120120091

Siu NO, Kelly DL (1998) Bayesian parameter estimation in probabilistic risk assessment. Reliability Engineering & System Safety 62(1):89–116, DOI 10.1016/S0951-8320(97)00159-2, URL http://www.sciencedirect.com/science/article/pii/S0951832097001592

Soeder DJ, Sharma S, Pekney N, Hopkinson L, Dilmore R, Kutchko B, Stewart B, Carter K, Hakala A, Capo R (2014) An approach for assessing engineering risk from shale gas wells in the United States. International Journal of Coal Geology 126:4–19, DOI 10.1016/j.coal.2014.01.004

Stanton EA, Ackerman F, Kartha S (2009) Inside the integrated assessment models: Four issues in climate economics. Climate and Development 1(2):166–184, DOI 10.3763/cdev.2009.0015

Taheriyoun M, Moradinejad S (2014) Reliability analysis of a wastewater treatment plant using fault tree analysis and Monte Carlo simulation. Environmental Monitoring and Assessment 187(1):4186, DOI 10.1007/s10661-014-4186-7, URL https://doi.org/10.1007/s10661-014-4186-7

Yang M, Khan FI, Lye L (2013) Precursor-based hierarchical Bayesian approach for rare event frequency estimation: A case of oil spill accidents. Process Safety and Environmental Protection 91(5):333–342, DOI 10.1016/j.psep.2012.07.006, URL http://www.sciencedirect.com/science/article/pii/S0957582012000894

Yevkin O (2010) An improved monte carlo method in fault tree analysis. In: 2010 Proceedings Annual Reliability and Maintainability Symposium (RAMS), pp 1–5